I had to buy a new hard drive for my array recently, which meant verifying that it works before I put it into service.

I don’t do burn-in tests of drives. Drives have a bathtub curve for reliability, like most components, but I find that if a drive is failing, it will start exhibiting performance problems that thorough testing reveals.

Running a sufficiently long burn-in is increasingly impractical. A burn-in would probably involve writing and reading everywhere on the disk multiple times, and disks have been getting bigger and bigger. Denser platters help with sequential speeds, but I’d estimate it would take several days to burn in a new drive.

Software

Only four pieces of software are needed for the tests themselves: dd, smartmontools, bonnie++, and gnuplot. dd is an integral part of Unix and Linux, and both smartmontools and bonnie++ have been packaged for all common distributions for ages. gnuplot is similarly common, but isn’t needed on the machine doing the testing, just on a machine for analysis. hdparm, which I use later to change drive settings, is also widely packaged.

S.M.A.R.T. status testing

S.M.A.R.T. is a way to get information from the drive that is supposed to give warning before failure. It works well for some types of drive failure, but doesn’t work at all for others.

After putting the drive in a tray and installing it, the first thing I do is check that the drive appears with dmesg, and get the name for it. In this case, it was /dev/sdn. If this doesn’t work, something is very wrong with the drive or motherboard.

With dmesg | grep sd I’m looking for the kernel messages that show the new disk being detected and attached as sdn.

With this information, I check S.M.A.R.T. with smartctl -a /dev/sdn. The disk information should match what the disk is, and no errors should be reported. After seeing that the drive responds, I run a short self-test with smartctl -t short /dev/sdn and wait a couple of minutes. I then run smartctl -a /dev/sdn again and check the self-test log, which should report that a test completed without error.
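The sequence above can be wrapped in a small shell function. This is a minimal sketch, not a fixed procedure: the function name smart_quick_check and the 120-second default wait are my own assumptions, and smartctl -l selftest is used at the end only because it prints the self-test log on its own instead of the full -a report.

```shell
# Sketch: quick S.M.A.R.T. check of a newly installed drive.
# The function name and the default wait time are illustrative assumptions.
smart_quick_check() {
    dev="$1"
    wait_seconds="${2:-120}"

    # Identity, attributes, and logs; confirm the model and serial match the drive.
    smartctl -a "$dev"

    # Start the short self-test; the drive runs it in the background.
    smartctl -t short "$dev"

    # Give the test time to finish before reading the log.
    sleep "$wait_seconds"

    # Print only the self-test log.
    smartctl -l selftest "$dev"
}

# Usage (as root): smart_quick_check /dev/sdn
```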

I also installed another drive, which showed up as /dev/sdo.

Sequential performance

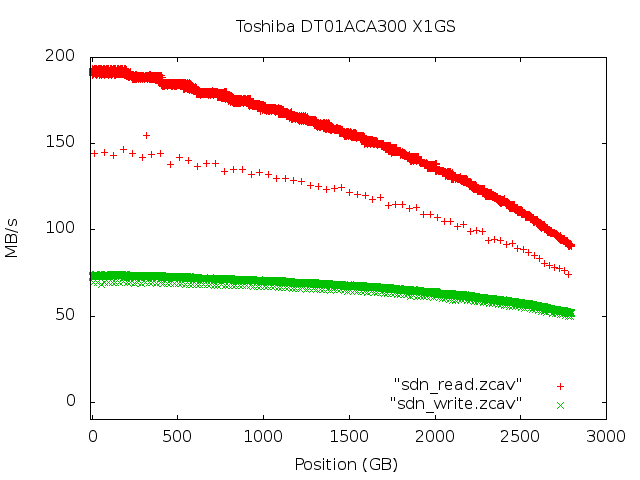

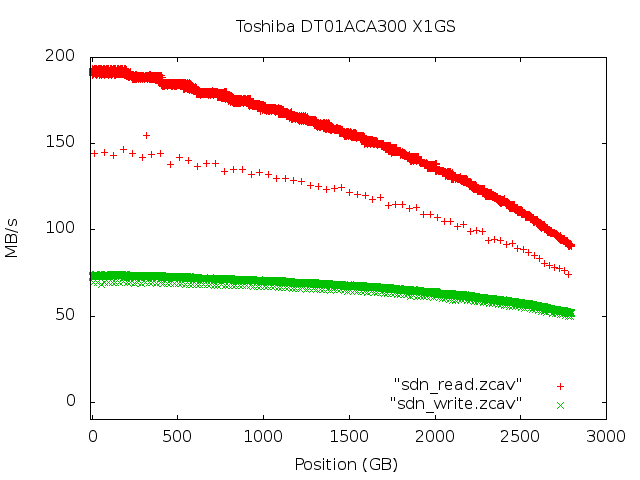

Next I perform a test of sequential read and write speeds using zcav, a program that comes with bonnie++. The read test is performed with zcav /dev/sdn > sdn_read.zcav and the write test with zcav -w /dev/sdn > sdn_write.zcav. The write test will destroy any data on the disk, so only do this on a new disk or one with no data you care about.

Each of these will go over the entire disk, reading or writing 512MB at a time in 1MB blocks.

Each test takes about a day for the drives I just got, and they create a file with raw speed data. When they’re finished, you’ll get a line like # Finished loop 1, on device /dev/sdn at 22:40:16.
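Before plotting, a quick numeric summary of a result file can be useful. The sketch below assumes the zcav output consists of comment lines starting with # plus data lines whose first column is the position on disk and whose second column is the speed; check the header line of your own file, since the layout may differ between bonnie++ versions. The function name zcav_summary is hypothetical.

```shell
# Sketch: minimum, maximum, and mean speed from a zcav result file.
# Assumes column 2 holds the speed and that comment lines start with "#".
zcav_summary() {
    awk '
        /^#/ || NF < 2 { next }    # skip comments and blank lines
        {
            speed = $2 + 0
            if (n == 0 || speed < min) min = speed
            if (n == 0 || speed > max) max = speed
            sum += speed
            n++
        }
        END {
            if (n > 0)
                printf "samples=%d min=%.1f max=%.1f mean=%.1f\n", n, min, max, sum / n
        }
    ' "$1"
}

# Usage: zcav_summary sdn_read.zcav
```

A large gap between the minimum and the mean, or isolated slow regions, is the kind of performance problem this testing is meant to surface.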

To visualize this data, I use gnuplot.
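A minimal sketch of such a plot, written as a shell function that prints a gnuplot script. It assumes the file names used above (sdn_read.zcav and sdn_write.zcav), that column 1 is the position on disk and column 2 the speed, and that the units are GiB and MiB/s; the function name, output file name, and image size are my own choices.

```shell
# Sketch: print a gnuplot script that plots read and write speed against
# position on disk for one drive. Column meanings and units are assumptions.
zcav_plot_script() {
    name="$1"
    cat <<EOF
set terminal png size 1024,768
set output "${name}_zcav.png"
set title "Sequential speed: ${name}"
set xlabel "Position on disk (GiB)"
set ylabel "Speed (MiB/s)"
set yrange [0:*]
plot "${name}_read.zcav" using 1:2 with lines title "read", \\
     "${name}_write.zcav" using 1:2 with lines title "write"
EOF
}

# Usage, on the analysis machine: zcav_plot_script sdn | gnuplot
```

gnuplot skips lines starting with # in data files by default, so the zcav header and "Finished loop" lines need no special handling.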


Read and write testing


This immediately reveals something interesting. The write speed is about half of the read speed, but looking at reviews online that tested with other IO benchmarks, both should be about the same. When I started this blog post, I intended this to be a quick write-up for testing disks, but it’s also going to cover read-ahead and drive settings.

There are a few ways to check performance with dd, and they reveal the difference between read and write speeds.

The most basic write test is sync; dd if=/dev/zero of=/dev/sdn bs=1M count=512 conv=fdatasync. Again, this will destroy data on the drive. The first sync statement is to make sure any write buffers are clear, and the conv=fdatasync makes sure the data is synced to disk before dd exits. If it didn’t do this, it would report absurdly high speeds for small writes that fit in cache. For my drives it reports 173.1 MB/s and 179.0 MB/s.

Another way to call dd is sync; dd if=/dev/zero of=/dev/sdn bs=1M count=512 oflag=dsync. This syncs after each 1M block, but writes the same amount of data total. This is what zcav does. My drives get 72.0 MB/s and 72.5 MB/s, in agreement with zcav. If I reduced the block size, it’d be much slower.
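To see the difference between the two sync strategies without pointing dd at a disk, the same two invocations can be run against a scratch file. This is only a sketch: the function name dd_sync_compare and the default count are assumptions, and because the writes go through a filesystem the numbers are not comparable to the raw-device figures above.

```shell
# Sketch: compare one sync at the end against a sync after every block.
# Writes to a scratch file, so it is safe to run on a disk that holds data.
dd_sync_compare() {
    target="$1"
    count="${2:-16}"

    sync
    echo "single sync at the end (conv=fdatasync):"
    dd if=/dev/zero of="$target" bs=1M count="$count" conv=fdatasync 2>&1 | tail -n 1

    sync
    echo "sync after every block (oflag=dsync):"
    dd if=/dev/zero of="$target" bs=1M count="$count" oflag=dsync 2>&1 | tail -n 1
}

# Usage: dd_sync_compare /tmp/dd_scratch 64
```

Both options are GNU dd features; the last line dd prints is its own throughput summary, which is what tail keeps.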

A read test with dd is dd if=/dev/sdn of=/dev/null bs=1M count=512. Because it’s reading, there are no syncs involved. My drives get 188.2 MB/s and 194.5 MB/s.

There are a couple of settings that can be adjusted: write caching and read-ahead, both of which are on by default. A third is look-ahead, which shouldn’t be adjusted.

Write caching uses a small amount of RAM on the drive to hold writes until the drive can get them onto the spinning platter. This sounds good, but can result in data loss if power is lost before the cached writes reach the platter.

With read-ahead, the OS requests more sectors than it immediately needs whenever it reads from disk, so that the data following the request is already in the cache. It dramatically increases sequential performance, but can degrade random performance.

Both can be disabled at once with hdparm -W0 -a0 /dev/sdn, or turned on with hdparm -W1 -a256 /dev/sdn.
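When comparing runs with and without these settings, it is easy to forget to turn them back on. A small wrapper, sketched below, disables both, runs whatever command it is given, and then restores the values used above. The function name with_caching_off is hypothetical, and -a256 is simply the value from the text, not necessarily the value your system started with; running hdparm -W -a /dev/sdn without values beforehand shows the current settings.

```shell
# Sketch: run one command with write caching and read-ahead disabled,
# then restore the settings used in the text (-W1 -a256).
with_caching_off() {
    dev="$1"
    shift

    hdparm -W0 -a0 "$dev"      # write cache off, read-ahead off
    "$@"                       # the benchmark command
    status=$?
    hdparm -W1 -a256 "$dev"    # write cache on, read-ahead 256 sectors

    return "$status"
}

# Usage (as root):
#   with_caching_off /dev/sdn dd if=/dev/sdn of=/dev/null bs=1M count=512
```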

The write cache doesn’t make a significant difference with 1MB block sizes, but without read-ahead, the read rate drops to 75 MB/s.

Write operation speed

A way to test write operation speed is sync; dd if=/dev/zero of=/dev/sdn bs=8K count=10000 oflag=dsync. Dividing the count by the time taken gives operations/second, and should come out very close to 120 for 7200 RPM drives, as they rotate 120 times per second.
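The arithmetic is simple enough to script. In this sketch both helper names are my own, and the 83.4 seconds in the usage example is a made-up timing for illustration, not a measurement from these drives.

```shell
# Sketch: operations per second from a dd run, and the rotation rate
# to compare it against.
ops_per_sec() {
    # $1 = count passed to dd, $2 = elapsed seconds reported by dd
    awk -v count="$1" -v seconds="$2" 'BEGIN { printf "%.1f\n", count / seconds }'
}

rotations_per_sec() {
    # $1 = spindle speed in RPM
    awk -v rpm="$1" 'BEGIN { printf "%.0f\n", rpm / 60 }'
}

# Usage:
#   rotations_per_sec 7200     # prints 120
#   ops_per_sec 10000 83.4     # prints 119.9 (hypothetical timing)
```

With a sync after every 8K block, each write has to wait for the platter to come around again, which is why the rate tracks the rotation rate.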

Random reads

Bonnie++ is the standard tool for testing random IO performance, but it requires a filesystem to make temporary files on. I make one on the new drive and mount it at /testpart.
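One way to set up such a filesystem is sketched below. The choice of ext4 and of formatting the whole device without a partition table are assumptions of mine, as is the function name prepare_testpart. Like the zcav write test, this destroys any data on the drive. Setting RUN=echo prints the commands instead of running them, which is a cheap way to double-check the device name first.

```shell
# Sketch: create a filesystem on the drive and mount it for bonnie++.
# ext4 on the bare device is an assumption; this wipes the drive.
prepare_testpart() {
    dev="$1"
    mnt="${2:-/testpart}"

    ${RUN:-} mkfs.ext4 "$dev"
    ${RUN:-} mkdir -p "$mnt"
    ${RUN:-} mount "$dev" "$mnt"
}

# Dry run:          RUN=echo prepare_testpart /dev/sdn
# For real (root):  prepare_testpart /dev/sdn
```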

Bonnie++ doesn’t run as root, so I change the owner of /testpart and continue as my normal user.

I run bonnie++ with 4 threads, skip some useless tests, and use twice the RAM size for the test files, in this case 64GB.
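A sketch of how such a run could be put together. The exact options are assumptions: -c 4 sets the concurrency, -n 0 skips the file creation tests, and -f skips the per-character tests, which may or may not be the tests skipped here. The helper names are hypothetical; twice_ram_mib reads MemTotal from /proc/meminfo and doubles it, since bonnie++ takes its -s size in megabytes.

```shell
# Sketch: size the bonnie++ run at twice the installed RAM.
twice_ram_mib() {
    meminfo="${1:-/proc/meminfo}"
    # MemTotal is reported in kB; double it and convert to MiB.
    awk '/^MemTotal:/ { printf "%d\n", ($2 * 2) / 1024 }' "$meminfo"
}

# The skipped tests (-n 0, -f) and the concurrency flag are assumptions.
run_bonnie() {
    dir="${1:-/testpart}"
    size_mib="${2:-$(twice_ram_mib)}"
    ${RUN:-} bonnie++ -d "$dir" -s "$size_mib" -n 0 -f -c 4
}

# Usage, as a normal user after changing the owner of /testpart:
#   sudo chown "$(id -un)" /testpart
#   run_bonnie /testpart
# Dry run: RUN=echo run_bonnie /testpart
```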

The output can be a bit cryptic as it tries to fit too much into narrow columns.


The comma-separated string at the bottom can create a nice display with bon_csv2html, but the important numbers are that it had 185 MB/s on the sequential write test, 93 MB/s on the sequential rewrite test, 245 MB/s on the sequential read test, and 321.7 seeks/s on the random test. The sequential tests correspond to tests with dd or zcav, except that they make better use of the operating system memory cache.

The number of threads doesn’t matter too much on a single storage volume, but the size used is significant. If I double it to 128GB the sequential read test decreases to 211 MB/s and random to 189.2 seeks/s. This shows the importance of RAM cache, but either size acts as a drive test.

Retesting S.M.A.R.T.

After all of this testing, I have the drive test itself again with a S.M.A.R.T. long self-test, started with smartctl -t long /dev/sdn.

This takes a few hours. After it’s done, I run smartctl -a /dev/sdn again and look for a new entry in the self-test log showing that the extended test completed without error.
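Checking the log by eye is fine for one drive, but it can also be scripted. The sketch below assumes the usual ATA self-test log layout, where the most recent entry starts with "# 1" and a passing test carries the status text "Completed without error"; the function name latest_selftest_ok is hypothetical, and drives that report their log in another format would need a different pattern.

```shell
# Sketch: succeed only if the newest self-test log entry passed.
# Reads the output of "smartctl -l selftest" on standard input.
latest_selftest_ok() {
    awk '
        /^# *1 / {
            found = 1
            ok = /Completed without error/
        }
        END {
            if (found && ok) exit 0
            exit 1
        }
    '
}

# Usage (as root):
#   smartctl -l selftest /dev/sdn | latest_selftest_ok && echo "long test passed"
```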