After my earlier ZFS testing, I thought there might be further performance gains from switching the xlog ZFS volume recordsize from 128K to 8K. Making the same change on the tablespace used for data cut the time by 16% and allowed additional gains from compression, so it seemed logical to test.
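For anyone wanting to repeat the change, here is a minimal sketch of how the recordsize could be switched. The dataset name `tank/xlog` is hypothetical, not the one used on this server, and the helper names are made up for illustration. It only prints the commands unless `dry_run` is turned off. Note that recordsize applies to files written after the change, so existing files keep their old block size.

```python
import subprocess


def recordsize_commands(dataset, recordsize):
    """Build the zfs commands that set recordsize and then read it back."""
    return [
        ["zfs", "set", f"recordsize={recordsize}", dataset],
        ["zfs", "get", "recordsize", dataset],
    ]


def apply_recordsize(dataset, recordsize, dry_run=True):
    """Print the commands (dry run) or execute them with subprocess."""
    for cmd in recordsize_commands(dataset, recordsize):
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)


# "tank/xlog" is an assumed dataset name; substitute your own.
apply_recordsize("tank/xlog", "8K")
```

With `dry_run=False` the commands need to run as a user allowed to change ZFS properties.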

Using the same server as before, I ran a new import, taking care to keep it comparable to my previous benchmarking.

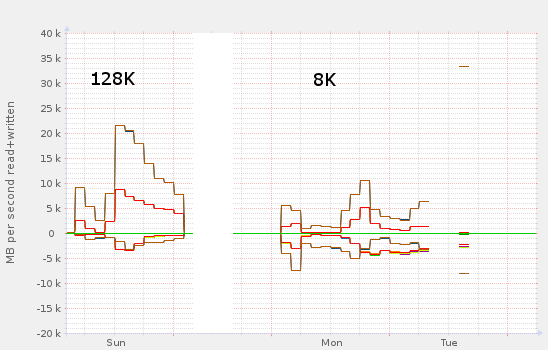

Results

Instead of being faster, the import was substantially slower with an 8K xlog volume recordsize, and it failed to complete.

| | recordsize | 128K | 8K |
|---|---|---|---|
| Processing | node | 1514 | 2011 |
| | way | 5938 | 11296 |
| | relation | 14716 | 33249 |
| | Total | 22168 | 46556 |
| Pending ways | | 8889 | >135 000 |
| Total | | 77441 | >193 000 |
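To put the slowdown in perspective, the following sketch computes the 8K to 128K ratio for each processing stage that actually finished. The numbers are copied from the table; the variable names are my own, and the pending ways and overall totals are left out because the 8K run only gives lower bounds for them.

```python
# Times from the table as (128K, 8K) pairs, in the table's own units.
timings = {
    "node": (1514, 2011),
    "way": (5938, 11296),
    "relation": (14716, 33249),
    "processing total": (22168, 46556),
}

# Ratio above 1 means the 8K run was slower than the 128K run.
slowdown = {stage: t8k / t128k for stage, (t128k, t8k) in timings.items()}

for stage, ratio in slowdown.items():
    print(f"{stage}: {ratio:.2f}x slower with 8K")
```

The node stage suffers least, while way and relation processing take roughly twice as long.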

Aside from being slower at every stage, the machine became unusable while creating the GIN index and eventually stopped responding, so I had to log in over IPMI and restart it before the import had completed. The munin graphs are incomplete because the machine stopped responding to the munin master's requests for data.

For whatever reason, an 8K recordsize on the xlog volume is completely unusable.

On the right side you can see interrupted lines where the server was not reliably responding to the munin master.